Luminance Processing – Making the IFN pop

Intro

The purpose of this tutorial is to show how, mainly with histogram adjustments, very faint detail – in this case integrated flux nebulosity or IFN – can be brought out of an image until it looks about as prominent as the faint regions we would find in much brighter objects. In other words, this is not a “from raw to jpeg” tutorial.

The image

The data is from the image Integrated Flux World. I originally captured the first 8 frames on April 6th, 7th and 8th, 2010, and I decided to process them right away because a not very promising weather forecast was going to delay the capture of the last two frames. However, one week later, on April 16th, I was able to get out one more time and captured these two additional frames. Since I didn’t want to start processing everything all over again, I processed these two frames individually before bringing them into the larger mosaic. Processing a mosaic in several steps, however, is not always recommended!

This tutorial describes the first part of the luminance processing for these two frames. The two master luminance files are available upon request.

NOTE: The screen-shots are wide because they were taken on a 2-monitor system, which is how I usually work, generally placing the image on my best monitor and the processing tools on the “bad” one 🙂 This, unfortunately, forced me to reduce the resolution of the screen-shots. However, if you would like to see the screen-shots at their full resolution, simply click on the corresponding “tiny” screen-shot (JPEG compression artifacts are however present and do impact the quality of the image).

The Data

The captured data was of good quality, but there was not a lot of it. The master files were generated from 6 subexposures of 15 minutes each, with an SBIG STL11k camera and a Takahashi FSQ106EDX telescope with the 0.7x reducer. The data was captured at Lake San Antonio, California – a dark site that sits right at a “gray” border in the Bortle scale, surrounded by blue and some green/yellow areas. For a light pollution map of Lake San Antonio, click here and look for its location on the bottom-right corner of the image.

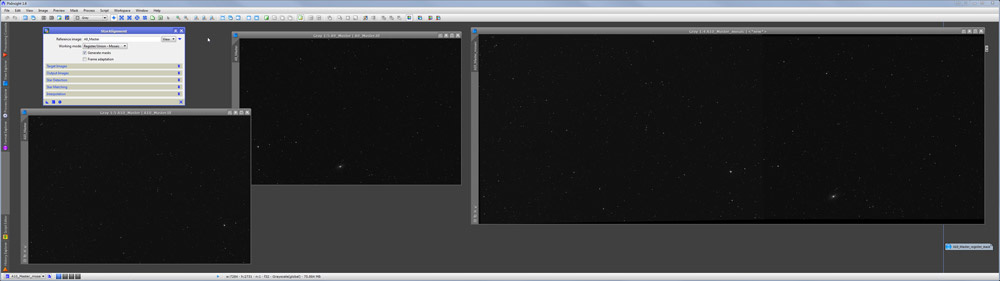

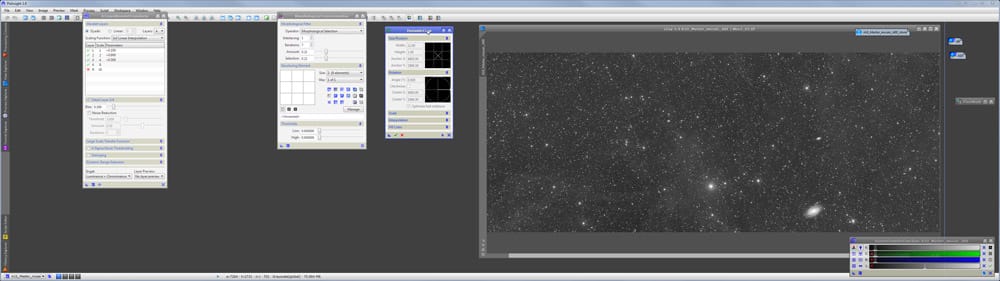

1 - Aligning and balancing the mosaic frames

First, both frames are registered and calibrated individually, with their darks, flats (a unique set for each frame) and bias. I used DeepSkyStacker for that, but of course, any other software (PixInsight, MaximDL, CCDStack, etc.) could be used as well. After that, we load both master luminance files in PixInsight and, using an STF, we crop the images to eliminate “bad” edges. After this step, I would usually adjust for background gradients using PixInsight’s DBE.

After the two master luminance files are ready to be put together, we run the StarAlignment tool, setting it to “Register/Union Mosaic” and selecting the “Generate masks” option (we’re going to need those masks). The “Frame adaptation” option is nice in general cases to correct for differences in background illumination and signal strength. However, I’ve noticed it can sometimes wipe out very faint signal in the background. Since our goal here is to reveal as much IFN as possible, we do not check that option.

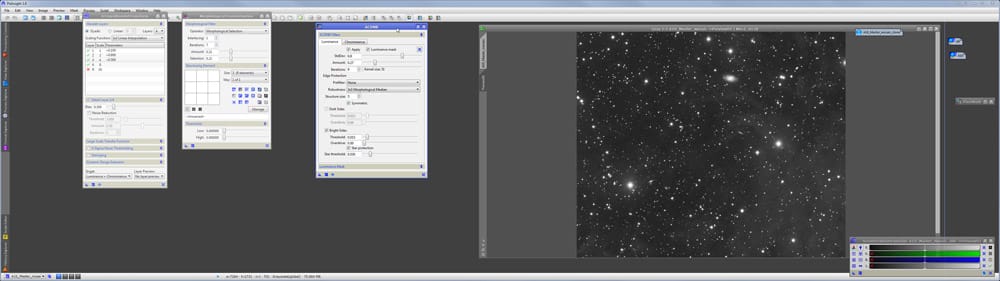

After StarAlignment has done its job, we can see both frames already nicely put together. As it usually happens with astroimages, the images are very dark and we can barely see anything but stars and a faint trace of M81.

We now run the ScreenTransferFunction (STF from now on) and click the “A” icon to let PixInsight do a very aggressive automatic screen stretch, so we can see what’s there. Remember that STF does not change our data, it only “stretches” it for our screen.

After this aggressive screen stretch we can already see there’s “stuff” in the background. We also see that the background illumination and the signal strength are not uniform among both frames.

We now apply the mask generated by StarAlignment, so everything we do will only affect one frame (the one we want to correct for background and signal uniformity), and using PixelMath we enter the formula ($T - Med(A9))*k1 + Med(A9)*k2, where $T is the image to which we’ll apply the PixelMath equation, and A9 is the second frame. The first part of the equation, ($T - Med(A9))*k1, corrects for differences in signal strength, while the second part, Med(A9)*k2, deals with differences in background illumination. The two constants k1 and k2 are found by trial and error, until we get the most seamless transition between the two frames. In this case, the best values seemed to be 0.99 for k1 (corrections in signal strength) and 1.121 for k2 (background). Notice the formula does not break the linearity of the data. This method to build a seamless mosaic, including the formula, is the work of Juan Conejero from PixInsight.
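To make the arithmetic of that formula concrete, here is a minimal Python sketch of the same expression applied to plain lists of pixel values. The function name `match_frame` and the tiny sample "frames" are made up for illustration, and the sketch leaves out the mask that restricts the correction to one frame inside PixInsight.

```python
from statistics import median

def match_frame(target, reference, k1, k2):
    """Apply (T - Med(ref))*k1 + Med(ref)*k2 to every pixel of target."""
    med_ref = median(reference)
    return [(t - med_ref) * k1 + med_ref * k2 for t in target]

# Made-up linear pixel values in [0, 1]; real frames have millions of pixels.
frame_to_fix = [0.010, 0.012, 0.011, 0.050]
frame_a9 = [0.011, 0.013, 0.012, 0.040]

# k1 and k2 are the trial-and-error values quoted in the text.
matched = match_frame(frame_to_fix, frame_a9, k1=0.99, k2=1.121)
print(matched)
```

Each output pixel is k1 times the input pixel plus a constant, which is why the data stays linear: differences between pixels are only rescaled by k1.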

UPDATE: When this tutorial was written back in 2010, PixInsight offered no tools dedicated to producing seamless mosaics. A few years ago, PixInsight started to include several powerful new tools that make building seamless mosaics a breeze. You’re welcome to use the approach described above if you like, but using the more advanced tools is highly recommended.

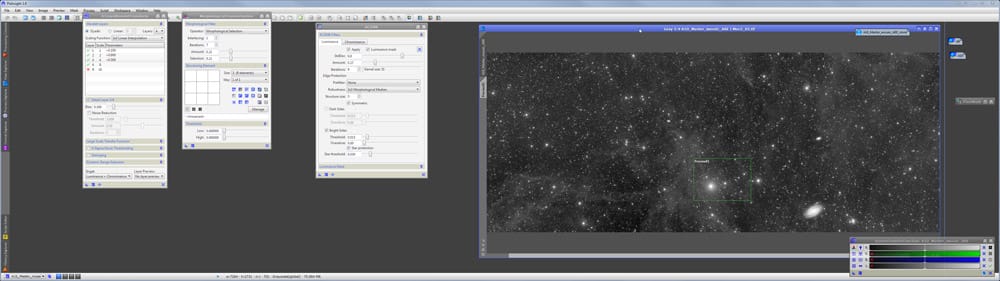

After applying PixelMath, we can see that the images now match much better. If we still saw some differences, they should be corrected at this point, by backing up and applying one or more DBE passes (background models) before the frame adaptation process.

Remember that what we’re seeing is a very aggressive screen stretch, and that other than the linear correction we just made with PixelMath, the data is still untouched (if we ignore the registration and alignment process), and still linear. This means that if we wanted to perform some tasks that are better done when the image is still linear, such as a deconvolution, we could do it. A deconvolution in this example would probably cause more trouble than it’s worth, though.

Now we’ll crop the image to get rid of the funky borders:

2 - Histogram adjustments, then some more

In some situations I would do a DBE right at this point. Although a carefully executed DBE could improve the uniformity of our background, I chose not to do it in this case, to avoid constructing a background model that could alter the natural but very faint illumination from the IFN. This is not a problem with DBE but rather, I’m trying to avoid a possible problem with me!

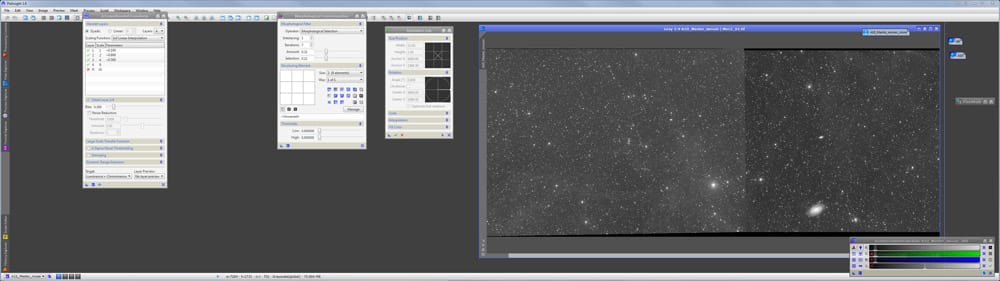

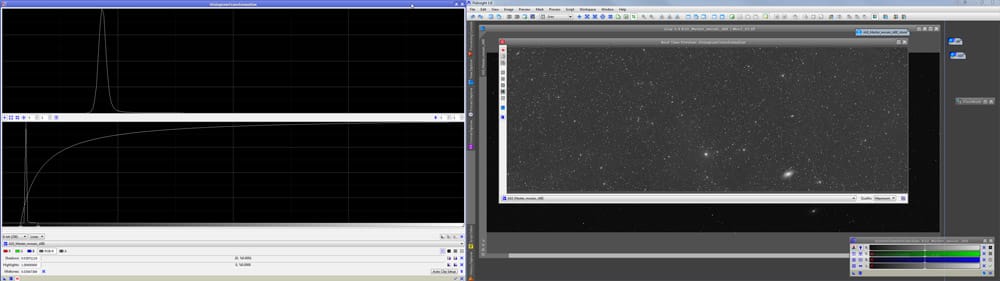

We’re ready then, for a first non-linear histogram stretch. This means we’ll deactivate the STF now, as, from now on, we want to see what we’re really doing.
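For readers who like to see the math, a non-linear histogram stretch of this kind can be sketched as a shadows/highlights clip followed by a midtones transfer function. This is a minimal Python sketch, not PixInsight's code; the function names and the sample values (shadows 0.001, midtones 0.05) are illustrative and are not the settings used in the screenshots.

```python
def mtf(m, x):
    """Midtones transfer function: maps 0 -> 0, m -> 0.5 and 1 -> 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    return ((m - 1.0) * x) / ((2.0 * m - 1.0) * x - m)

def histogram_stretch(pixels, shadows, midtones, highlights):
    """Clip to [shadows, highlights], rescale to [0, 1], then apply the MTF."""
    out = []
    for p in pixels:
        x = (p - shadows) / (highlights - shadows)
        x = min(1.0, max(0.0, x))  # values outside the range are clipped
        out.append(mtf(midtones, x))
    return out

# Made-up linear values: sky background, faint nebulosity, a bright star core.
linear = [0.002, 0.004, 0.600]
stretched = histogram_stretch(linear, shadows=0.001, midtones=0.05, highlights=1.0)
print(stretched)
```

A midtones value below 0.5 lifts the faint end much more than the bright end, which is exactly what we want for IFN. A midtones value of 0.5 leaves the image unchanged.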

This first histogram stretch doesn’t do much to bring out the faint detail we seek. Also notice that when I do histogram adjustments that I consider critical, I resize the histogram dialog a lot, covering my entire left monitor! This by the way, is not a requirement, just something very nice to have 🙂

If you look closely, in this stretch, PixInsight is telling me I’m “dark clipping” 20 pixels (0.0001% of the pixels in the image). If you click on the screenshot, the top number under the histogram graph in the middle gives us that information. We definitely do not want to clip the histogram, especially at this early stage, but something like 0.0001% is acceptable. 20 pixels, that’s okay.
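If you want to check a clipping figure like this outside PixInsight, the count is easy to reproduce. The sketch below is a plain Python illustration with a hypothetical `clipped_fraction` helper and made-up pixel values; it assumes a pixel counts as clipped when it falls strictly below the shadows point.

```python
def clipped_fraction(pixels, shadows):
    """Return (count, percent) of pixels that fall below the shadows point."""
    clipped = sum(1 for p in pixels if p < shadows)
    return clipped, 100.0 * clipped / len(pixels)

# Made-up sample: 2 pixels out of 10,000 sit below a shadows point of 0.001.
sample = [0.0005] * 2 + [0.01] * 9998
count, percent = clipped_fraction(sample, shadows=0.001)
print(count, percent)

# The same arithmetic for the numbers in the text: 20 pixels at roughly
# 0.0001% implies an image of about 20 million pixels.
print(100.0 * 20 / 20_000_000)
```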

Some people say that histogram adjustments should be done just once. Personally, I not only don’t see why, I think that more than one histogram adjustment, if done carefully, does more good than bad. So here’s a second histogram adjustment.

This time we’re clipping 0.1177% of the pixels at the black point, according to PixInsight. That’s still very ok. It’s still a very insignificant amount and it helps us get some contrast. Also notice that to perform this stretch, I zoomed in on the X axis of the histogram by a factor of 9. This helps a lot in finding the point where the histogram curve starts to rise. Likewise we could zoom in on the Y axis if we liked. The histogram tool in PixInsight is, no doubt, the way a histogram tool should be for processing astro-images.

QUICK NOTE: Although I believe no processing tutorial should be understood as a cooking recipe, if you really want to replicate the exact histogram adjustments I did in this example, click on the screenshot to view the large image, and take note of the Shadows/Highlights/Midtones values.

Hmm… My notes say I only did two histogram adjustments in a row, but my screenshots show a third one. Ok, that’s just fine 🙂

The truth is, I will perform as many histogram adjustment iterations as I feel I need, as long as the resulting image looks better to me, I’m not clipping it, and I’m not going overboard either. When I see that I cannot improve the image to my satisfaction anymore (again, without clipping it), then I stop.

This may be a good time to see what’s going on with the galaxy M81 (the big white smudge on the bottom-right, around 5 o’clock)…

As you can see, after these histogram stretches, M81 is starting to look a bit saturated. It’s not completely blown out, but it’s getting there… Here’s the deal… I know that in the 8 mosaic frames I’ve already processed, M81 is already there (barely, but it’s there), nicely processed and all, so when it’s time to overlap these two frames with the already processed mosaic, I’ll just place this layer under the already processed image. In other words, I’m not too concerned about M81 in this image, which gives me more freedom to work my way to make the IFN pop nicely.

Having said that, the short answer for dealing with this is of course using a “gradual lightness-based mask with variable mid tones and white point”. Ok, that was a mouthful, but in practice it’s very simple. The trick is that we don’t want the mask to fully protect the galaxy, and we achieve this on two different but related fronts:

- By building the mask based on the lightness, the mask will be gradual, that is, it will be brighter in the brighter areas of the galaxy (protecting them better from saturating), and fainter in the outer arms.

- In addition to that, we do NOT want the mask to fully protect our image. That is, we want our stretches to actually affect the galaxy, just not nearly as much as everything else. To do that we “dim” the mask by lowering its white point. This process of adjusting the white point of the mask right where the galaxy is should be iterative for every new histogram stretch we perform – that is, after we’ve done our stretch, with the mask in place, we adjust the histogram of the mask until we see the galaxy (or whatever other object we’re protecting) blend well with its surroundings. Of course we work this with a real-time preview.
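The two points above can be sketched numerically. This is only an illustration in plain Python under my own assumptions: "dimming" the mask is modeled as scaling the lightness-based protection by a `white_point` factor below 1, and the function names and pixel values are made up.

```python
def dimmed_protection(lightness, white_point):
    """Lightness-based protection, scaled so it never exceeds white_point."""
    return [min(1.0, max(0.0, v)) * white_point for v in lightness]

def blend_with_protection(original, stretched, protection):
    """Protection 1 keeps the original pixel, protection 0 takes the stretch."""
    return [
        p * o + (1.0 - p) * s
        for o, s, p in zip(original, stretched, protection)
    ]

# Made-up values: sky background, an outer arm of the galaxy, the galaxy core.
original = [0.02, 0.40, 0.80]
stretched = [0.10, 0.85, 1.00]  # what an unmasked stretch would produce

protection = dimmed_protection(original, white_point=0.6)
result = blend_with_protection(original, stretched, protection)
print(result)
```

The background gets almost the full stretch, the core gets only part of it, and lowering `white_point` further lets more of the stretch through everywhere. That is the knob we keep adjusting after each new stretch.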

3 - This is getting noisy

If our goal is to reveal all this faint stuff in the background, we can see we’re starting to get what we want. However, this comes at a price: our stars are getting fatter (the very small stars especially are becoming anything but subtle details) and as we stretch this faint signal, we’re also bringing up a lot of noise, so most of the signal we want so much shows up with a very poor signal-to-noise ratio. As for the stars, since we have not adjusted the white point even once, the problem is not very severe, fortunately.

If you click on the screen-shot you’ll see the image really is getting noisy. Unfortunately, the JPEG compression even for the “real sized” screen-shot, and the fact that even the large screen-shot shows the image at a reduced size, don’t give a good picture of what we’re dealing with, but let’s just say that yes, the image is getting noisy, because not only did we stack just 6 subexposures, but the IFN also sits barely above the noise.

So in any case it’s clear that we need to solve these two problems, and quickly. It makes sense to “correct” for the noise first, as the operations to correct the stars will work better on an image with a better SNR.

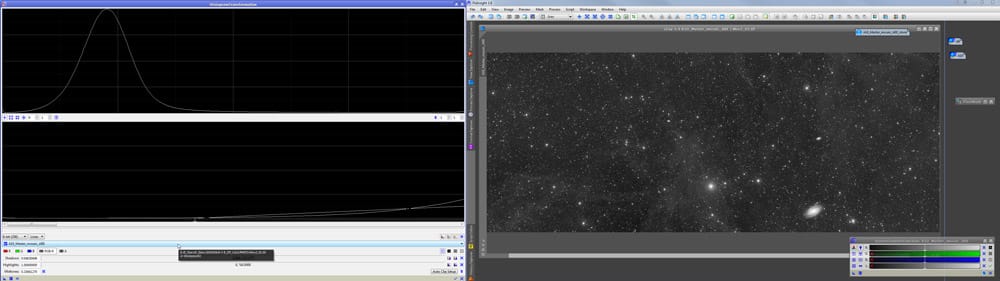

To deal with the noise, my favorite tool is the ACDNR tool from PixInsight. ACDNR is a very CPU intensive operation, so while we tweak the parameters, it’s better to experiment with a preview, as shown in the screen-shot below. We try to select an area in our preview that has some variety: very low SNR areas, low SNR areas and if possible, also some high SNR areas. We don’t really have any area high in SNR here, so an area with very low and low SNR areas works ok.

A good understanding of all the parameters in ACDNR is important.

In this case, we definitely check the “lightness mask” option. We also used the default edge protection values. I’m not sure why I didn’t take advantage of the prefiltering process, as it does an excellent job with very noisy images (maybe I did, but my notes don’t mention it and I’m just reading from the screen-shot). I would definitely suggest previewing the effects of the prefiltering process in ACDNR. Regardless… I chose the morphological median “robustness” as it works better to preserve sharp edges.

As for the amount and iterations parameters, I always favor small amounts but several iterations (it usually works better and more gradually). Last, I used a high value for the standard deviation because the main purpose of reducing the noise at this point – although we know there’s going to be little background “free” in our image – is to attack what otherwise would be considered “background noise”, and high StdDev values usually work best for that. This is somewhat contrary to what using a high value as StdDev really means: it means we’re going to reduce large scale noise, and what we have here is noise at different scales. But it works well, as long as you don’t overdo it.
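To illustrate the “small amounts, several iterations” idea (and only that idea), here is a toy Python sketch. It is not ACDNR: the smoother is a simple 3-sample moving average that I picked as a stand-in, and all names and values are made up.

```python
def smooth3(values):
    """3-sample moving average with edge replication (a stand-in smoother)."""
    n = len(values)
    out = []
    for i in range(n):
        left = values[max(i - 1, 0)]
        right = values[min(i + 1, n - 1)]
        out.append((left + values[i] + right) / 3.0)
    return out

def denoise(values, amount, iterations):
    """Blend in a fraction `amount` of the smoothed signal, several times."""
    current = list(values)
    for _ in range(iterations):
        smoothed = smooth3(current)
        current = [
            (1.0 - amount) * c + amount * s
            for c, s in zip(current, smoothed)
        ]
    return current

# Made-up noisy background row.
noisy = [0.10, 0.14, 0.09, 0.15, 0.08, 0.13]
gentle = denoise(noisy, amount=0.2, iterations=5)
print(max(noisy) - min(noisy), max(gentle) - min(gentle))
```

Each pass only nudges the data, so it is easy to stop as soon as the background looks right instead of overshooting in a single heavy pass.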

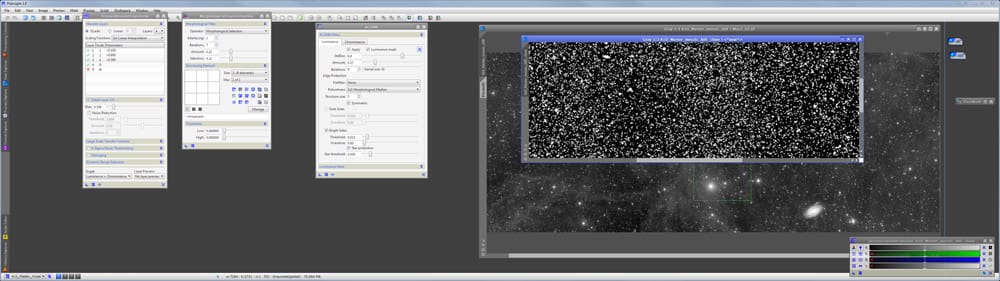

After applying the ACDNR process, I go, once again, to the histogram tool, and see if something can be squeezed out of there now that some of the noise is gone. Usually, there is, and our faint stuff is looking better. Here’s the image after our fourth histogram adjustment. It may look dirty (I don’t claim all the noise is gone!) but in this small screen-shot, what looks like noise is in fact caused by the thousands of stars that are overwhelming the image. That and the JPEG compression artifacts in the screen-shot.

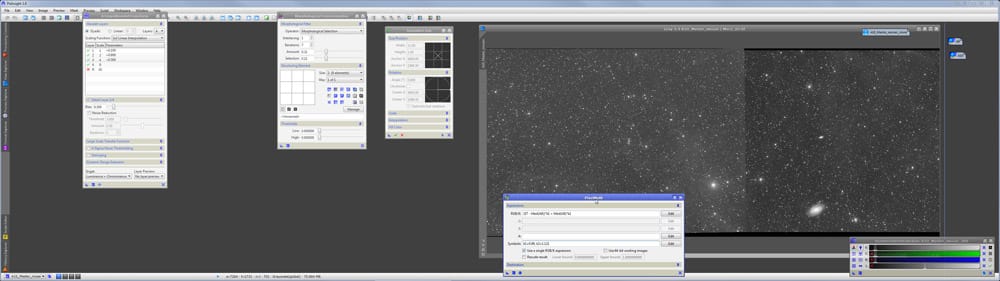

4 - Star “management”

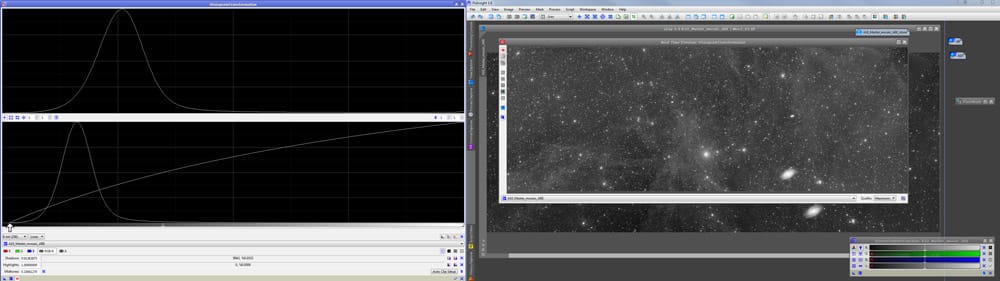

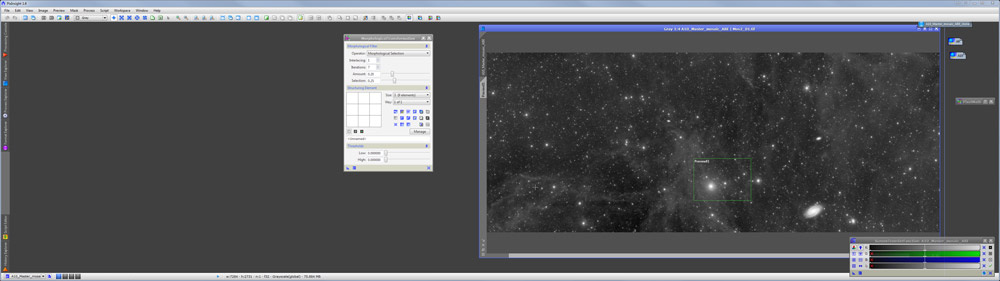

Now that we’ve stretched our image a great deal and somewhat dealt with the noise, it’s time to do something about the stars. For this, we’ll use the MorphologicalTransformation (MT) tool in PixInsight. For those not familiar with MT, you can think of it as an extremely fancy and customizable maximum/minimum filter – but seriously, extremely versatile!

Applying an MT to an image to correct the stars unquestionably requires a star mask that protects everything but the stars. Without a star mask, the MT would be applied to everything in the image. Not only would the results be unsatisfactory, but a true MT also takes a well-defined structuring element as a model for the transformation. In this case the structuring element is a circle (stars are, well, round), and so it makes sense to apply the transformation only to circular structures. A star mask that protects everything but the stars does that.

So we start by creating a good star mask…

A star mask can be easily created with PixInsight’s StarMask module, although for this occasion I chose to create it “manually” using the ATrousWaveletsTransform (ATWT) tool. This is easy – on a duplicate of the lightness, just eliminate the “R” (residual) layer of a 4-layer dyadic sequence, and we’re done. Of course, you can do further adjustments to your mask, depending on what you want the mask to accomplish: increase the bias level at different layers, or the noise reduction, etc.

I also often like to binarize the star mask and then blur it a bit with a Gaussian Blur – MT will work well with such strong masks. In this case however I chose to simply adjust the white/mid points of the histogram to make the mask stronger – which also works well. Here’s what the star mask looks like:
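To show why dropping the residual layer leaves mostly stars, here is a minimal one-dimensional sketch of an à trous decomposition in plain Python. It assumes a B3 spline scaling function and clamped borders, works on a single made-up row instead of a 2-D image, and all function names are mine, so treat it as an illustration of the idea, not as what ATWT does internally.

```python
B3 = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16]  # assumed scaling function

def smooth_atrous(signal, layer):
    """Convolve with B3, spacing its taps 2**layer samples apart."""
    step = 2 ** layer
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(B3):
            j = min(max(i + (k - 2) * step, 0), n - 1)  # clamp at the borders
            acc += w * signal[j]
        out.append(acc)
    return out

def atrous_layers(signal, n_layers=4):
    """Return (detail layers, residual); their sum rebuilds the signal."""
    details = []
    current = list(signal)
    for layer in range(n_layers):
        smoothed = smooth_atrous(current, layer)
        details.append([c - s for c, s in zip(current, smoothed)])
        current = smoothed
    return details, current

def star_mask(signal, n_layers=4):
    """Keep the small-scale layers, drop the residual, clip to [0, 1]."""
    details, _residual = atrous_layers(signal, n_layers)
    summed = [sum(layer[i] for layer in details) for i in range(len(signal))]
    return [min(1.0, max(0.0, v)) for v in summed]

# Made-up row: a slow background gradient plus one sharp "star" at index 32.
row = [0.1 + 0.002 * i for i in range(64)]
row[32] += 0.8
mask = star_mask(row)
print(mask.index(max(mask)), max(mask))
```

The smooth gradient ends up almost entirely in the residual, so once that layer is removed the star is practically the only thing left in the mask.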

And here’s our image protected by the star mask (the areas in red are the protected areas):

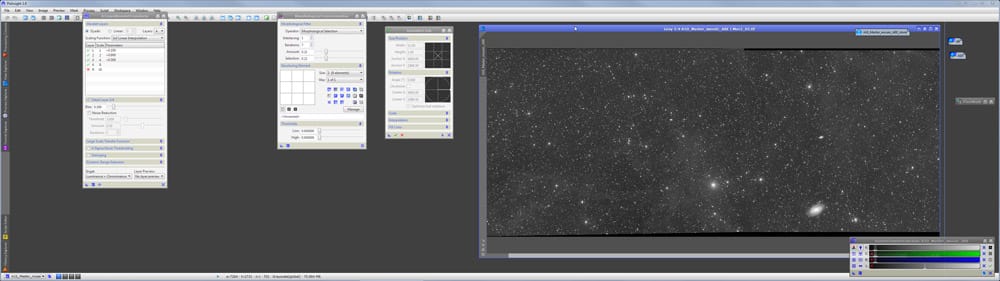

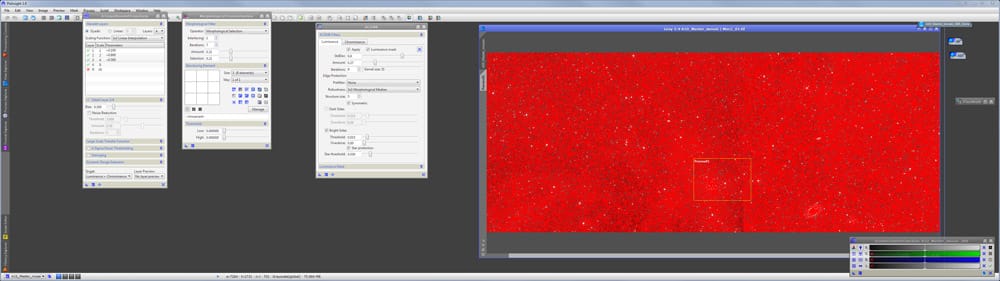

With everything but the stars protected, we adjust the parameters of the MT and apply. In this case I opted for a small structuring element (3×3) as it seemed to work better than larger ones, which were introducing a few halos around some stars. These halos can be corrected by adjusting MT’s threshold parameters, but in this case I just went with the 3×3. I used the Morphological Selection as my operator choice. Most people tend to use the “erosion” or “closing” methods, but “morphological selection” is usually my favorite because it acts as a blend between the erosion and dilation methods, and I can just adjust the Selection slider to emphasize either the dilation or the erosion effect. Other operators can also do a decent job.

Here’s the image after the morphological transformation. Notice how the very tiny stars are no longer disturbing the scene, and the larger ones are not “in your face” anymore. This also helped clean up the image from the “in your face” panorama of an overwhelming field of stars.
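As a rough picture of what a selection operator does, here is a small Python sketch that treats it as a rank-order filter over a 3×3 neighborhood, applied through a star mask. It is a simplification under my own assumptions: it uses the full 3×3 square as structuring element, clamps at the image borders, and the function names and the tiny test image are made up.

```python
def morphological_selection(image, selection):
    """Rank-order filter over a 3x3 window.

    selection=0 picks the minimum (erosion), 1 the maximum (dilation)
    and 0.5 the median.
    """
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(h):
        for x in range(w):
            window = sorted(
                image[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            )
            out[y][x] = window[round(selection * (len(window) - 1))]
    return out

def apply_through_mask(original, processed, star_mask):
    """Mask 1 = fully processed (a star), mask 0 = left untouched."""
    return [
        [m * p + (1.0 - m) * o for o, p, m in zip(orow, prow, mrow)]
        for orow, prow, mrow in zip(original, processed, star_mask)
    ]

# Made-up 5x5 image: flat background with one small star in the middle.
image = [[0.1] * 5 for _ in range(5)]
image[2][2] = 1.0
for y, x in ((1, 2), (3, 2), (2, 1), (2, 3)):
    image[y][x] = 0.6

# Star mask: 1 on the star and its immediate neighbors, 0 everywhere else.
star_mask = [[1.0 if image[y][x] > 0.1 else 0.0 for x in range(5)] for y in range(5)]

shrunk = apply_through_mask(image, morphological_selection(image, 0.25), star_mask)
print(shrunk[2])
```

Sliding `selection` toward 0 shrinks the star harder and sliding it toward 1 grows it, while the mask keeps the background exactly as it was.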

Final words

Although the image is certainly not finished, as a result of our last operation, the IFN has become the main “attraction” in our image, and we can see wisps of it all over the place. Here’s a slightly bigger view of the image as it is at this point (click on the screen-shot for a larger version):

As I just mentioned, the processing of our luminance data is clearly not finished. We need to reduce the flux and halos in the brighter stars if we like (I often do). And then comes the color, which, after being processed independently, will make us do some adjustments to the image after combining it with the lightness (delinearized luminance). And on top of that, in this particular case we’ll then need to make sure everything will match nicely with our already processed 2×4 mosaic (one big reason why mosaics should be processed all at once, and not in pieces, if at all possible).

The good part is, we’ve achieved our goal of making the IFN pop nicely, without an overwhelming amount of noise (although the JPEG compression artifacts may give the wrong impression) or the stars “eating” up the view. Further processing on this image shouldn’t be as demanding, and should be more similar to processing a “regular” astro-image.

We haven’t used the curves tool, DDP, wavelets (other than to build a star mask), HDR tools, etc. In a nutshell, all we’ve really done is:

- Three histogram stretch iterations

- One pass at reducing the noise

- Another histogram stretch

- One morphological transformation to reduce star size and impact

- One last histogram stretch

In the next tutorial, also using this image of the IFN as an example (just not these two frames), I will describe a way, using some simple multi-scale techniques, to bring out some of the very faintest stuff in this particular image: detail sitting in an area that, after all the techniques described in this tutorial and more, was still showing a very dark sky background.